Samuel Li

I am an M.S. in Robotics student at the CMU Robotics Institute, advised by Katia Sycara. I am also a researcher at Wayve AI on the Embodied Foundation Models team, supervised by Vijay Badrinarayanan and Thomas Kollar. I earned my B.S. in Mathematics & Computer Science from UIUC, where I conducted computer vision research under Yuxiong Wang. In earlier research, I explored machine learning for climate prediction with Ryan Sriver.

My research focuses on 3D/4D vision and robotics. I aim to develop spatially intelligent models capable of perceiving and understanding our dynamic physical world. I believe such models, trained on tasks such as reconstruction, pose estimation, and tracking, can unlock generalizable representations useful in robotics and beyond. I also work on robot manipulation techniques that integrate LLM-driven world knowledge with spatially aware symbolic representations.

Outside of research and classes, I like to play tennis, cook, backpack, and spend time with my dog and cat.

Research

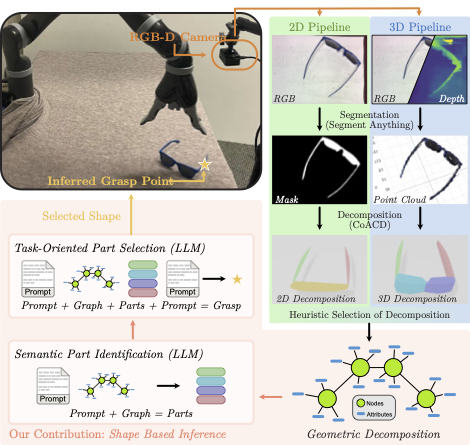

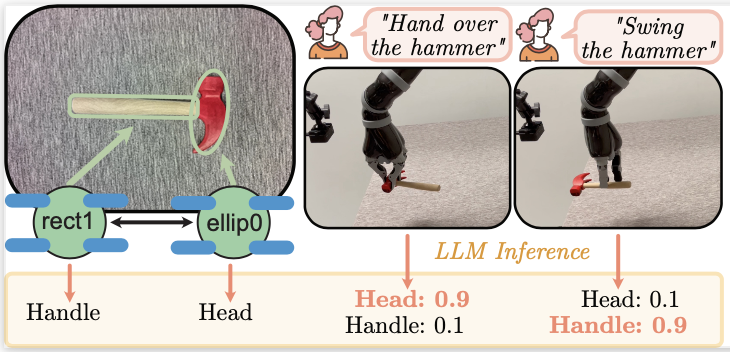

ShapeGrasp: Zero-Shot Task-Oriented Grasping with Large Language Models through Geometric Decomposition
Samuel Li, Sarthak Bhagat, Joseph Campbell, Yaqi Xie, Woojun Kim, Katia Sycara, Simon Stepputtis
IROS (Oral), 2024
paper / website
We develop a novel and efficient zero-shot, task-oriented grasping pipeline that constructs a symbolic graph from monocular RGB+D input for fine-grained, shape-based LLM reasoning.

Geometric Shape Reasoning for Zero-Shot Task-Oriented Grasping
Samuel Li, Sarthak Bhagat, Joseph Campbell, Yaqi Xie, Woojun Kim, Katia Sycara, Simon Stepputtis
ICRA 3D Visual Representations for Robot Manipulation Workshop, 2024
paper
We propose a lightweight zero-shot, task-oriented grasping approach utilizing LLMs for part-level semantic reasoning over geometric decompositions.

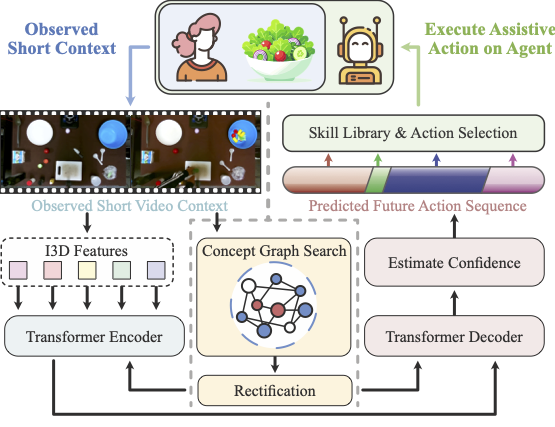

Let Me Help You! Neuro-Symbolic Short-Context Action Anticipation
Sarthak Bhagat, Samuel Li, Joseph Campbell, Yaqi Xie, Katia Sycara, Simon Stepputtis
RA-L, 2024
paper / website
We develop a novel modification to the transformer architecture for short-context action anticipation, enabling human-robot collaboration in real-world experiments.

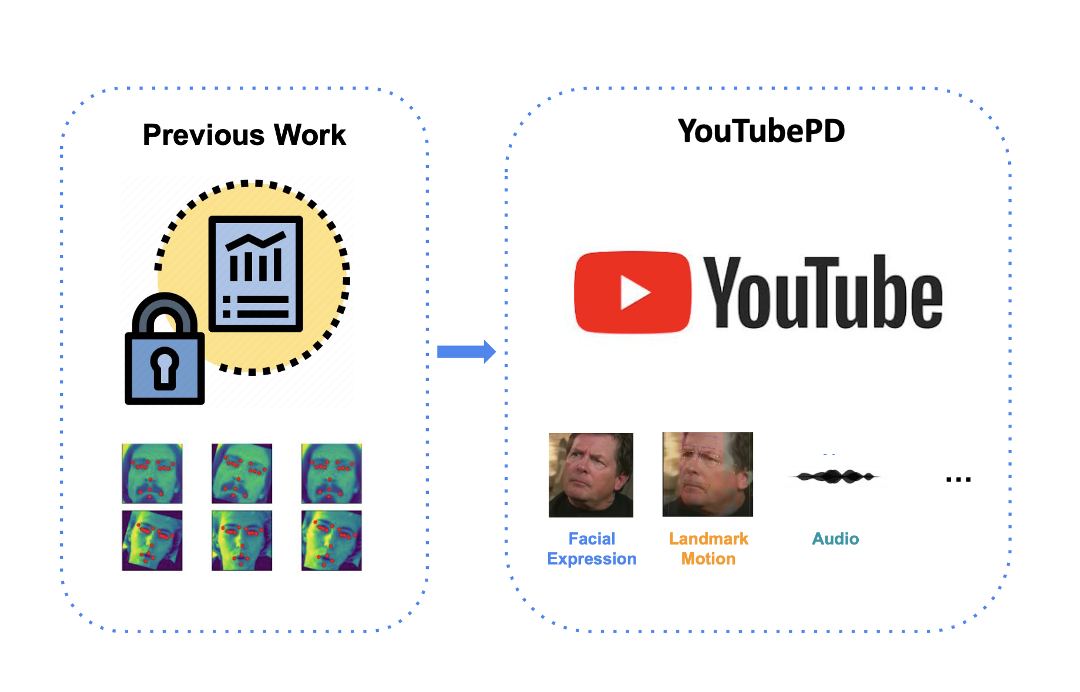

YouTubePD: A Multimodal Benchmark for Parkinson’s Disease Analysis
Andy Zhou*, Samuel Li*, Pranav Sriram*, Xiang Li*, Jiahua Dong*, Ansh Sharma, Yuanyi Zhong, Shirui Luo, Maria Jaromin, Volodymyr Kindratenko, Joerg Heintz, Christopher Zallek, Yuxiong Wang
NeurIPS Datasets and Benchmarks Track, 2023
paper / website
We introduce the first publicly available Parkinson’s disease analysis benchmark and demonstrate the generalizability of our developed models to real-world clinical settings.

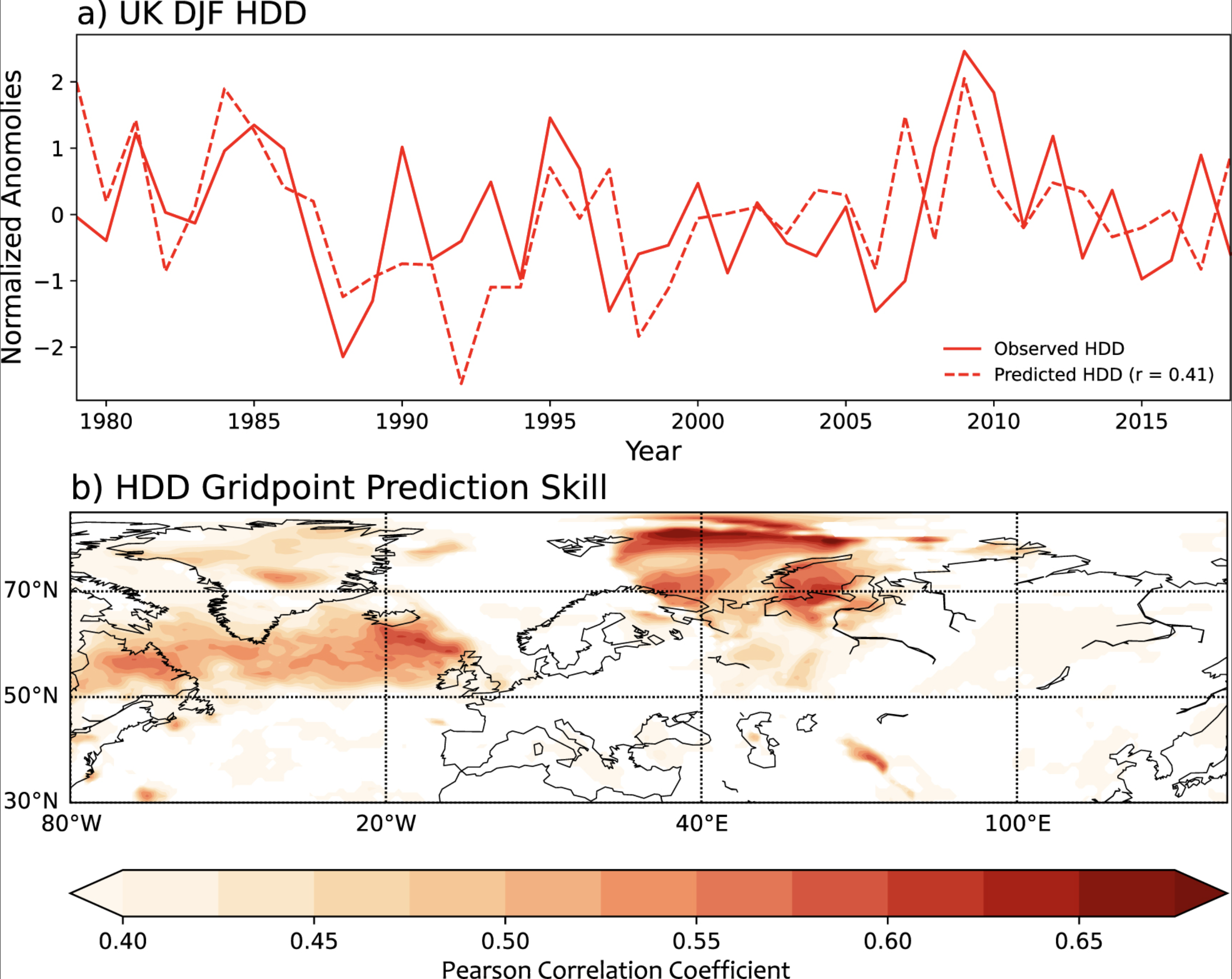

Skillful Prediction of UK Seasonal Energy Consumption based on Surface Climate Information
Samuel Li, Ryan Sriver, Douglas E. Miller
Environmental Research Letters, 2023
paper / code
We show that winter climate and energy demand values can be predicted two months in advance using surface climate information.

Projects

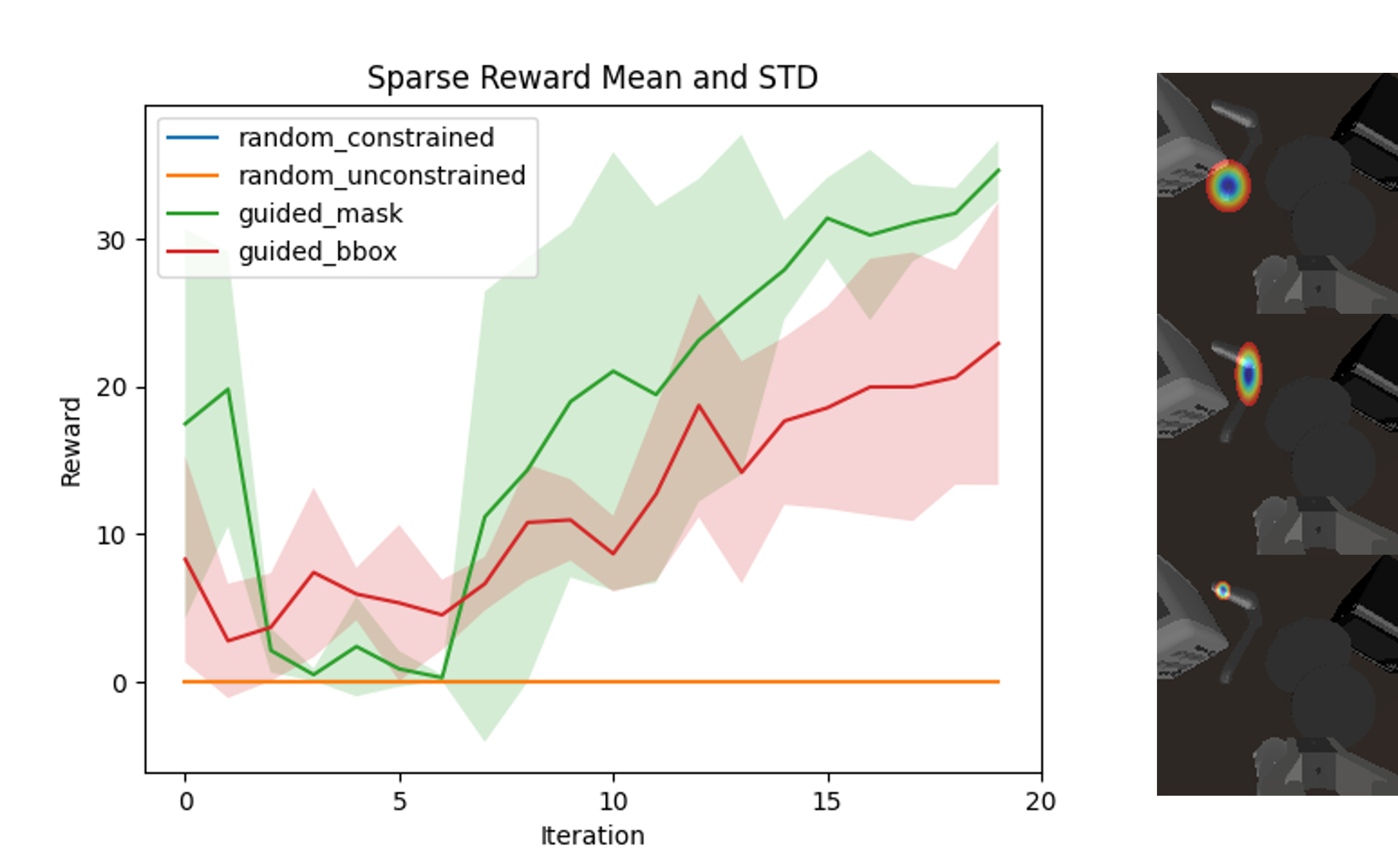

Enhancing Sample Efficiency via Affordance-Based Exploration
CMU 16-745 Optimal Control & Reinforcement Learning
2024-04-23 / poster
With Yuchen Zhang, Yunchao Yao, and Yihan Ruan. We solve an optimal control problem on a robotic arm to accurately throw an object to a goal location.

Enhancing Sample Efficiency via Affordance-Based Exploration
CMU 16-831 Intro to Robot Learning
2023-12-16 / paper
With Yunchao Yao, we leverage affordance understanding in foundation models for efficient, safe, and aligned task-conditioned exploration and learning for robotic manipulation.

Design and source code from Leonid Keselman's Jekyll fork of Jon Barron's website